Sanjai Gangadharan, Area Vice President – South ASEAN at A10 Networks, Inc.

Kubernetes (also known as K8s), the container orchestration tool originally developed by Google, has quickly become the platform of choice for deploying containerized applications in both public and private clouds.

For DevOps teams, K8s provides a common platform for deploying applications across different cloud environments. It abstracts away the intricacies of the underlying cloud infrastructure, which lets teams focus on their core tasks. For organizations, this translates into the flexibility to deploy applications in whichever cloud best meets customers’ needs, while optimizing costs at the same time.

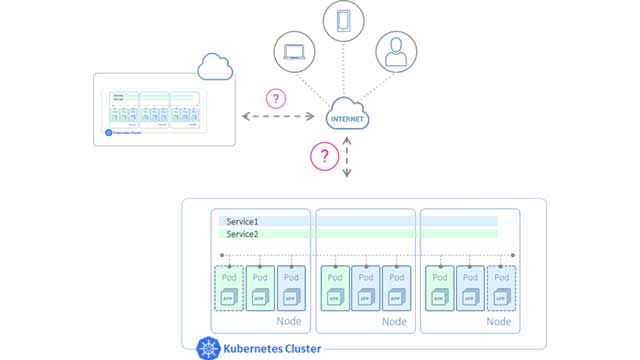

This flexibility to deploy applications in any cloud, however, creates the challenge of making them accessible to end users in a reliable and consistent manner. For example, when an application moves from a private cloud to a public cloud (such as AWS or Azure), how do you ensure the same level of accessibility, performance, reliability and security as in the private cloud?

To make applications accessible to end users, Kubernetes supports the following options:

NodePort: A port (the NodePort) is allocated on each node, and end users access the application at any node’s IP address and that port. With this option, you have to manually configure a load balancer to distribute traffic among the nodes.

LoadBalancer: Like NodePort, this option allocates a port on each node, but it also provisions and connects to an external load balancer. This requires integration with the underlying cloud provider’s infrastructure, so it is typically used with public cloud providers that offer such an integration. This tight integration, however, makes moving an application from one cloud provider to another difficult and error-prone.

Ingress Controller: Kubernetes defines an Ingress resource, implemented by an Ingress Controller, for routing HTTP and HTTPS traffic to applications running inside the cluster. An Ingress Controller, however, does not remove the need for an external load balancer. As with the LoadBalancer option, each public cloud provider has its own Ingress Controller that works in conjunction with its own load balancer. For example, the Application Gateway Ingress Controller for Azure Kubernetes Service (AKS) works in conjunction with Azure Application Gateway. This again ties the access solution to a specific cloud deployment.
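For the NodePort option, the manual work falls to you. The minimal Python sketch below illustrates it. It builds the backend list an external load balancer would need, pairing every node’s IP with the same NodePort, and then rotates through that list round-robin. The node IPs and the port 30080 are hypothetical. By default, Kubernetes allocates NodePorts from the 30000-32767 range.

```python
from itertools import cycle

# Hypothetical cluster nodes (documentation address range) and NodePort.
NODE_IPS = ["192.0.2.11", "192.0.2.12", "192.0.2.13"]
NODE_PORT = 30080  # Kubernetes assigns NodePorts from 30000-32767 by default


def nodeport_backends(node_ips: list[str], node_port: int) -> list[str]:
    """Every node exposes the same NodePort, so each node is one backend."""
    return [f"{ip}:{node_port}" for ip in node_ips]


# A simple external load balancer rotating through the nodes round-robin.
picker = cycle(nodeport_backends(NODE_IPS, NODE_PORT))
for _ in range(4):
    print(next(picker))
```

Nothing in this sketch tracks cluster membership. When a node is added or removed, someone has to edit the backend list by hand.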

Clearly, none of the above options provides a truly cloud-agnostic solution. A cloud provider’s custom load balancer or Ingress Controller may be quick and easy to adopt in the short term. Over time, however, it increases management complexity and inhibits automation, because you now have to deal with multiple different solutions.

An ideal solution would have the following key attributes:

Cloud-agnostic: The solution should work in both public and private clouds. This means it should be available in different form factors (such as virtual, physical and container), so that it can be deployed in a form that is optimal for that environment.

Dynamic load balancer configuration: The solution should dynamically configure the load balancer to route traffic to the Pods running inside the Kubernetes cluster as Pods are created and scaled up/down.

Support for automation tools: The solution should support automation tools so it can be integrated into existing DevOps processes such as CI/CD pipelines.

Centralized visibility and analytics: The solution should provide centralized visibility and analytics. This enables proactive troubleshooting and fast root-cause analysis, which leads to higher application uptime.

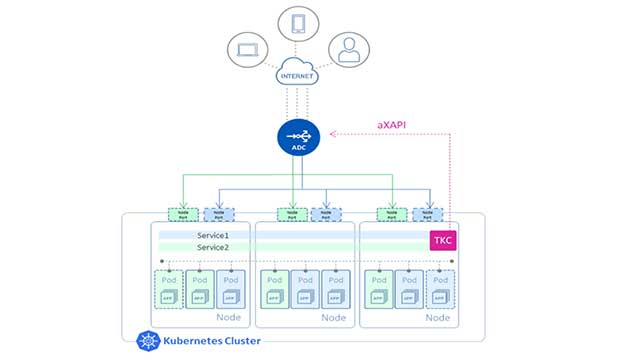

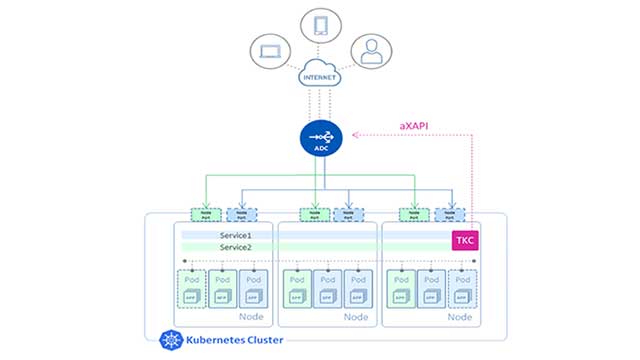

A10 provides such a solution using its Thunder® ADC along with the Thunder Kubernetes Connector (TKC):

Thunder ADC: Thunder ADC comes in multiple form factors (virtual, physical and container), so the solution can be deployed in both multi-cloud and hybrid-cloud environments. It sits outside the Kubernetes cluster and load balances traffic destined for the applications running inside the cluster. For this to work, the Thunder ADC must be configured, and that is the job of the TKC.

TKC: The TKC runs inside the Kubernetes cluster as a container and automatically configures the Thunder ADC as the Pods are created and scaled up/down.

The TKC takes an Ingress Resource file as input. This file contains the server load balancing (SLB) parameters to be configured on the Thunder ADC. These include the virtual IP (VIP), the protocol (e.g., http) and the port number (e.g., 80) on which the Thunder ADC listens for user requests. They also include the name of the service-group that will hold the list of nodes to which the Thunder ADC forwards traffic.

The Ingress Resource file also specifies the service within the Kubernetes cluster for which SLB configuration is to be done on the Thunder ADC. The TKC monitors the K8s API server (kube-apiserver) for changes to this service as the corresponding Pods are created or scaled up/down. It keeps track of the nodes on which these Pods are running. It then makes an aXAPI (REST API) call to the Thunder ADC to automatically configure these nodes as members of the service-group.
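The reconciliation step described above can be sketched roughly as follows. This is a hypothetical illustration, not A10’s implementation. The `SlbParams` and `Pod` types, the service and service-group names, and the IP addresses are all invented for the example. The sketch also takes the cluster state as a snapshot instead of watching kube-apiserver. Its output is a plan of service-group membership changes. In the actual product, each change would be applied through an aXAPI call, which this sketch does not model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SlbParams:
    """Hypothetical SLB settings, as they might be read from an Ingress Resource file."""
    vip: str            # virtual IP the ADC listens on
    protocol: str       # e.g. "http"
    port: int           # e.g. 80
    service_group: str  # ADC service-group that holds the node members
    k8s_service: str    # Kubernetes service whose Pods back this VIP


@dataclass(frozen=True)
class Pod:
    """Hypothetical, minimal view of a Pod: its service and the node it runs on."""
    name: str
    service: str
    node_ip: str


def nodes_for_service(pods: list[Pod], service: str) -> set[str]:
    """Nodes that currently host at least one Pod of the given service."""
    return {pod.node_ip for pod in pods if pod.service == service}


def plan_changes(params: SlbParams, pods: list[Pod],
                 current_members: set[str]) -> list[tuple[str, str, str]]:
    """Diff the desired nodes against the current service-group members.

    Returns (action, service_group, node_ip) tuples; a real controller
    would turn each one into an API call to the load balancer.
    """
    desired = nodes_for_service(pods, params.k8s_service)
    to_add = sorted(desired - current_members)
    to_remove = sorted(current_members - desired)
    return ([("add", params.service_group, ip) for ip in to_add]
            + [("remove", params.service_group, ip) for ip in to_remove])


# Hypothetical example: "web" has scaled onto 192.0.2.12, and 192.0.2.14 no
# longer runs any "web" Pods, but the service-group still lists it.
params = SlbParams(vip="198.51.100.10", protocol="http", port=80,
                   service_group="web-sg", k8s_service="web")
pods = [
    Pod("web-1", "web", "192.0.2.11"),
    Pod("web-2", "web", "192.0.2.11"),
    Pod("web-3", "web", "192.0.2.12"),
    Pod("db-1", "db", "192.0.2.13"),
]
for change in plan_changes(params, pods, current_members={"192.0.2.11", "192.0.2.14"}):
    print(change)
```

The plan is computed as a diff between the desired and current membership, not by re-pushing the full member list. A diff-based plan leaves unaffected members alone when the cluster scales.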

This greatly simplifies and automates configuring the Thunder ADC as new services are deployed within the K8s cluster. It also shortens the learning curve for DevOps engineers. They can specify SLB configuration parameters in an Ingress Resource file instead of spending time learning the Thunder ADC’s configuration commands.

For sending traffic to the Pods, the A10 solution supports two major modes of operation:

Load balancing at node level: In this mode, the Thunder ADC sends traffic to the NodePort allocated on each node for the application. Once the traffic reaches a node, the K8s cluster load balances it internally and sends it to the Pods. Load balancing therefore works at the node level, not at the Pod level. Suppose one node has 10 Pods and another has 20 Pods. Traffic is still distributed equally between the two nodes (assuming round-robin load balancing), so traffic is distributed unequally at the Pod level.

Load balancing at Pod level: In an upcoming release, the TKC will be able to program the Thunder ADC to send traffic directly to the Pods, bypassing the internal load balancing mechanism of the Kubernetes cluster. This is achieved using IP-in-IP tunnels to the Pod network on each node. In this mode, load balancing is done at the Pod level, which ensures a balanced distribution of traffic among the Pods (assuming round-robin load balancing). This option, however, requires that the Container Network Interface (CNI) plugin used with the K8s cluster support advertising the Pod subnet, as the Calico CNI plugin does.
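The 10-Pod/20-Pod example above shows how much the two modes differ. The short Python sketch below computes each Pod’s share of traffic under node-level and Pod-level round-robin. It follows the description above in assuming that traffic arriving at a node is spread only across that node’s own Pods. That is the behavior of `externalTrafficPolicy: Local`. Under the Kubernetes default (`Cluster`), kube-proxy may also forward traffic to Pods on other nodes. The node names are hypothetical.

```python
from fractions import Fraction


def node_level_shares(pods_per_node: dict[str, int]) -> dict[str, Fraction]:
    """Per-Pod traffic share when the ADC round-robins across nodes.

    Each node gets an equal slice, which is then split evenly among
    that node's own Pods (assumes node-local forwarding).
    """
    node_share = Fraction(1, len(pods_per_node))
    return {node: node_share / count for node, count in pods_per_node.items()}


def pod_level_shares(pods_per_node: dict[str, int]) -> dict[str, Fraction]:
    """Per-Pod traffic share when the ADC round-robins across all Pods."""
    total_pods = sum(pods_per_node.values())
    return {node: Fraction(1, total_pods) for node in pods_per_node}


# The 10-Pod / 20-Pod cluster from the example (node names are hypothetical).
pods = {"node-a": 10, "node-b": 20}
for mode, shares in (("node-level", node_level_shares(pods)),
                     ("pod-level", pod_level_shares(pods))):
    for node, share in shares.items():
        print(f"{mode}: each Pod on {node} gets {float(share):.1%}")
```

With node-level balancing, each Pod on node-a receives 1/20 (5%) of the traffic, but each Pod on node-b receives only 1/40 (2.5%). With Pod-level balancing, each of the 30 Pods receives 1/30 (about 3.3%).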

Beyond automatic configuration of the Thunder ADC by the TKC, the A10 solution offers the following benefits:

Support for automation tools: The A10 solution can be easily integrated into existing DevOps processes through its support for automation tools such as Terraform and Ansible.

Support for L4 and L7 load balancing: With A10’s solution, you have the flexibility to do both L4 and L7 load balancing as your requirements dictate. This is unlike Ingress Controller solutions, which support only L7 (HTTP/HTTPS) routing.

High-performance traffic management: Thunder ADC provides enterprise-grade traffic management policies, including header rewrite, bandwidth or application rate limiting, and configurable TLS cipher lists for security policies.

Centralized policies: Because all application traffic passes through the Thunder ADC, it provides a central point for applying and enforcing security policies and policies for any other business need.

Centralized analytics: When the solution is deployed along with the A10 Harmony Controller®, DevOps/NetOps teams get centralized traffic analytics for easier and faster troubleshooting. This leads to more consistent uptime and greater customer satisfaction. The A10 solution also supports open-source monitoring tools such as Prometheus/Grafana, so teams that use these tools can continue doing so.

Flexible licensing: With A10’s FlexPool, a software subscription model, organizations have the flexibility to allocate and distribute capacity across multiple sites as their business and application needs change.